🔥 PyTorch: It's Python on FIRE! 🔥

PyTorch is an open source machine learning library that works directly with Python to make neural network modeling quick and painless. As soon as I met PyTorch, I knew it was going to join my collection of absolute favorite libraries, along with NumPy, Pandas, and the rest of the machine learning and deep learning crew. The fact that I love deep learning plays heavily in this equation. But bias aside, I will show here some of the immense utility, time savings, and functionality that PyTorch offers, and it is mind-blowing.

For example, PyTorch's torch.cuda module lets users run model training on GPUs rather than CPUs, which can greatly reduce training times. That is no small favor! In Google Colab notebooks, we can switch the runtime to a GPU and start using it right away through this feature.
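As a minimal sketch, assuming a toy nn.Linear model and random input (neither comes from this post), the usual pattern is to pick a device once and then move the model and the data onto it:

```python
import torch
import torch.nn as nn

# Use the GPU if PyTorch can see one (e.g. a Colab GPU runtime), otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Hypothetical tiny model and batch; the sizes are arbitrary
model = nn.Linear(4, 2).to(device)
batch = torch.randn(8, 4, device=device)

output = model(batch)  # the forward pass runs on whichever device was selected
print(output.device)
```

The same script runs unchanged on a CPU-only machine, which is why the device is chosen with torch.cuda.is_available() rather than hard-coded.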

Another perk of using PyTorch for neural networks is its Autograd feature, a huge benefit to the model training process. During backpropagation, Autograd calculates the gradient for each of the model's parameters and stores it in that parameter's .grad attribute. This is a whole topic in and of itself and deserves a presentation all its own. Suffice it to say, it is by far one of PyTorch's greatest boasts!
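A tiny worked example of Autograd, using a made-up one-parameter "loss" rather than a real model: calling .backward() fills in .grad on the tensor created with requires_grad=True.

```python
import torch

w = torch.tensor(3.0, requires_grad=True)  # parameter we want a gradient for
x = torch.tensor(2.0)                      # plain input, no gradient tracked

# y = (w * x) ** 2, so dy/dw = 2 * (w * x) * x = 2 * 6 * 2 = 24
y = (w * x) ** 2

y.backward()    # backpropagation: Autograd computes dy/dw
print(w.grad)   # tensor(24.)
print(x.grad)   # None, because x was not created with requires_grad=True
```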

⇢ There are numerous other benefits to using PyTorch. I will be focusing on the following five functions here:

- torch.randn() and torch.randn_like()
- torch.narrow()
- tensor.view()
- torch.clone()
- torch.select()
- and a bonus function at the end!

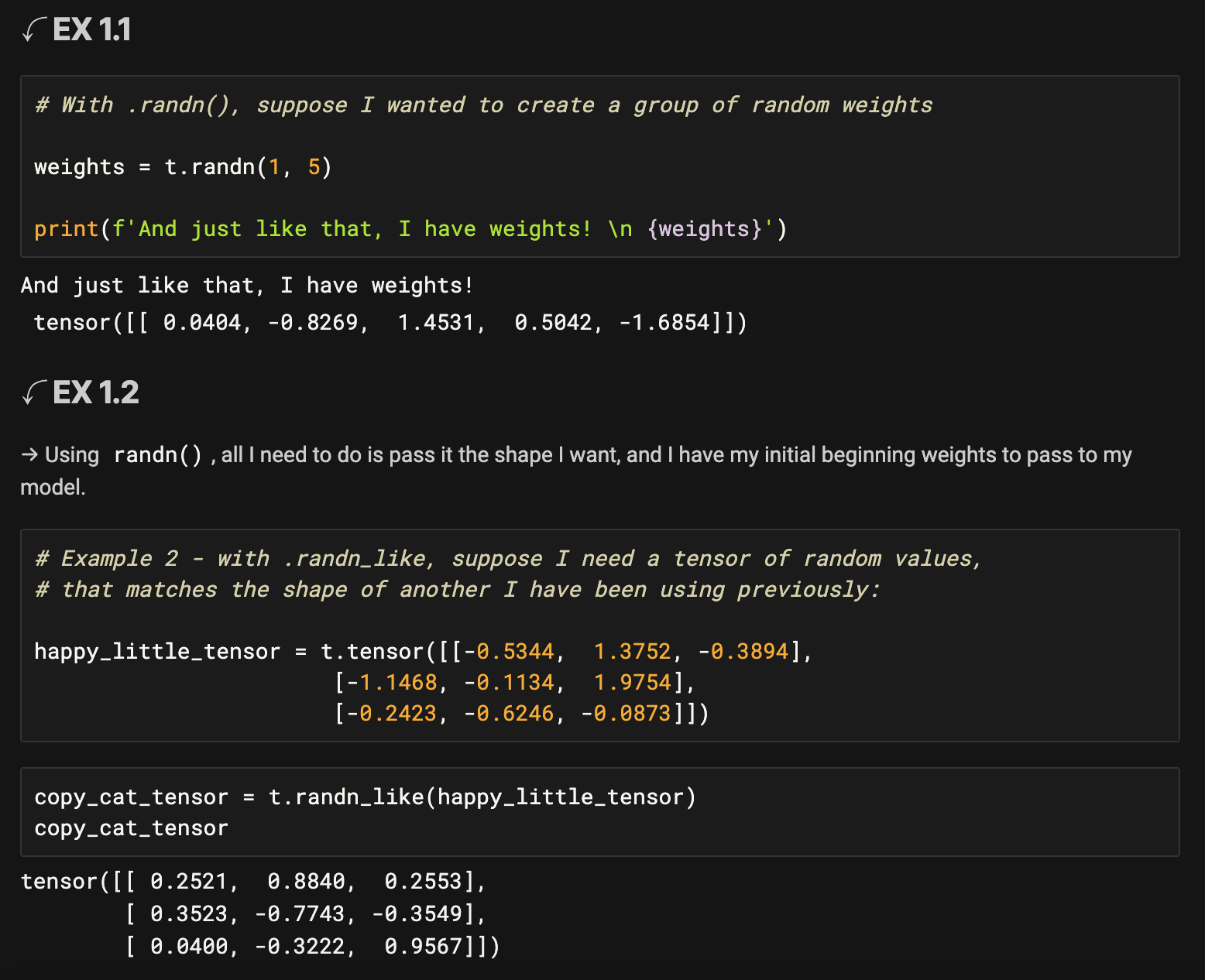

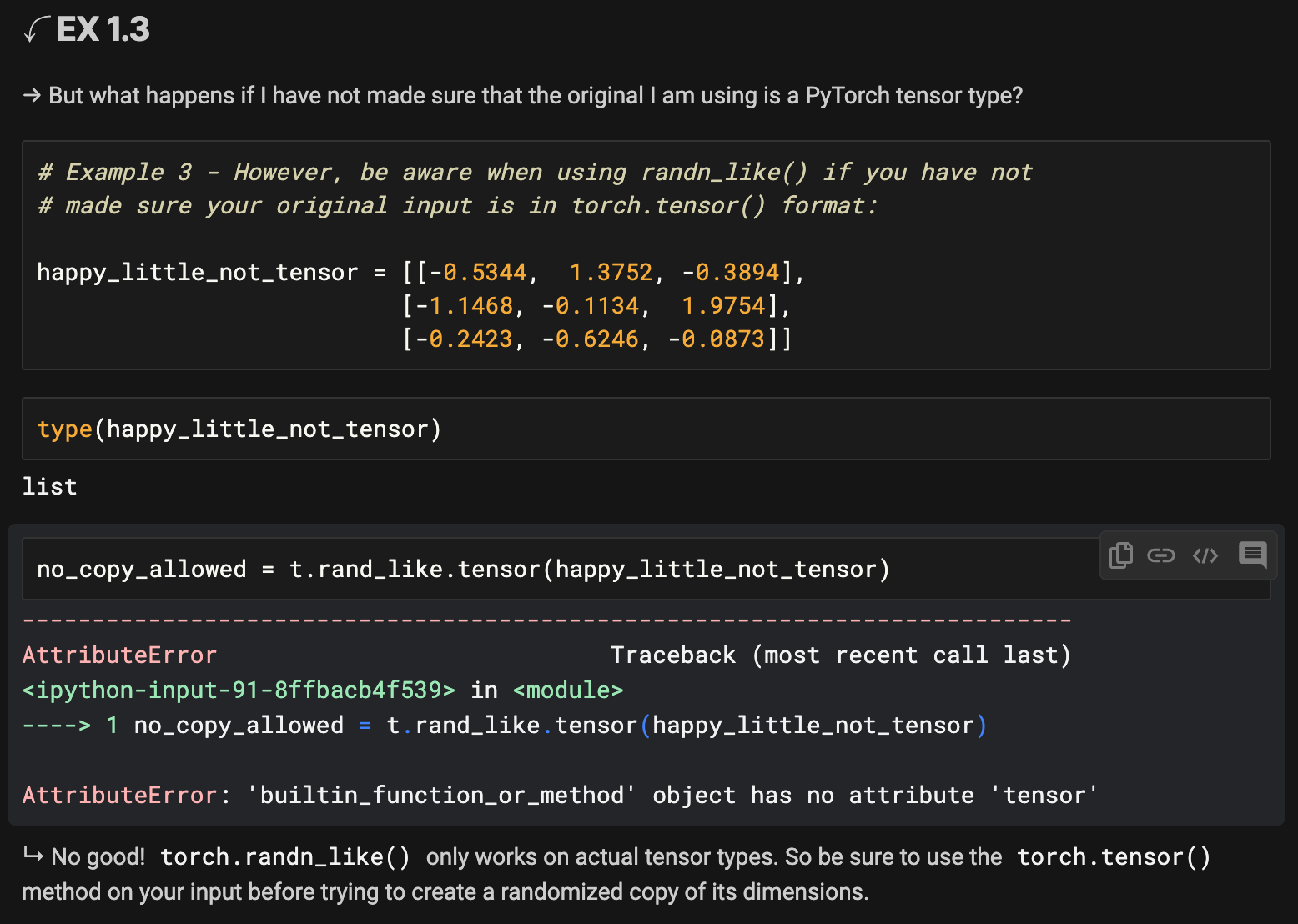

In the example above, I started out with an original tensor with dimensions (3, 3) and used torch.randn_like() to create a tensor of the same dimensions, filled with random values drawn from a standard normal distribution.
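A minimal text version of the idea, with hypothetical tensor names and a fixed seed so the draws repeat from run to run:

```python
import torch

torch.manual_seed(0)  # make the random draws repeatable

original = torch.ones(3, 3)

# torch.randn(*size): a new tensor of the given shape, values drawn from N(0, 1)
weights = torch.randn(3, 3)

# torch.randn_like(t): same shape (and dtype/device) as t, values drawn from N(0, 1)
noise = torch.randn_like(original)

print(weights.shape, noise.shape)  # torch.Size([3, 3]) torch.Size([3, 3])
```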

➤ Function 1 Summary:

I find these functions extremely important, given their value in creating random initial weights for training a model. They shorten code considerably and make it fast and easy to perform the many operations that require random values.

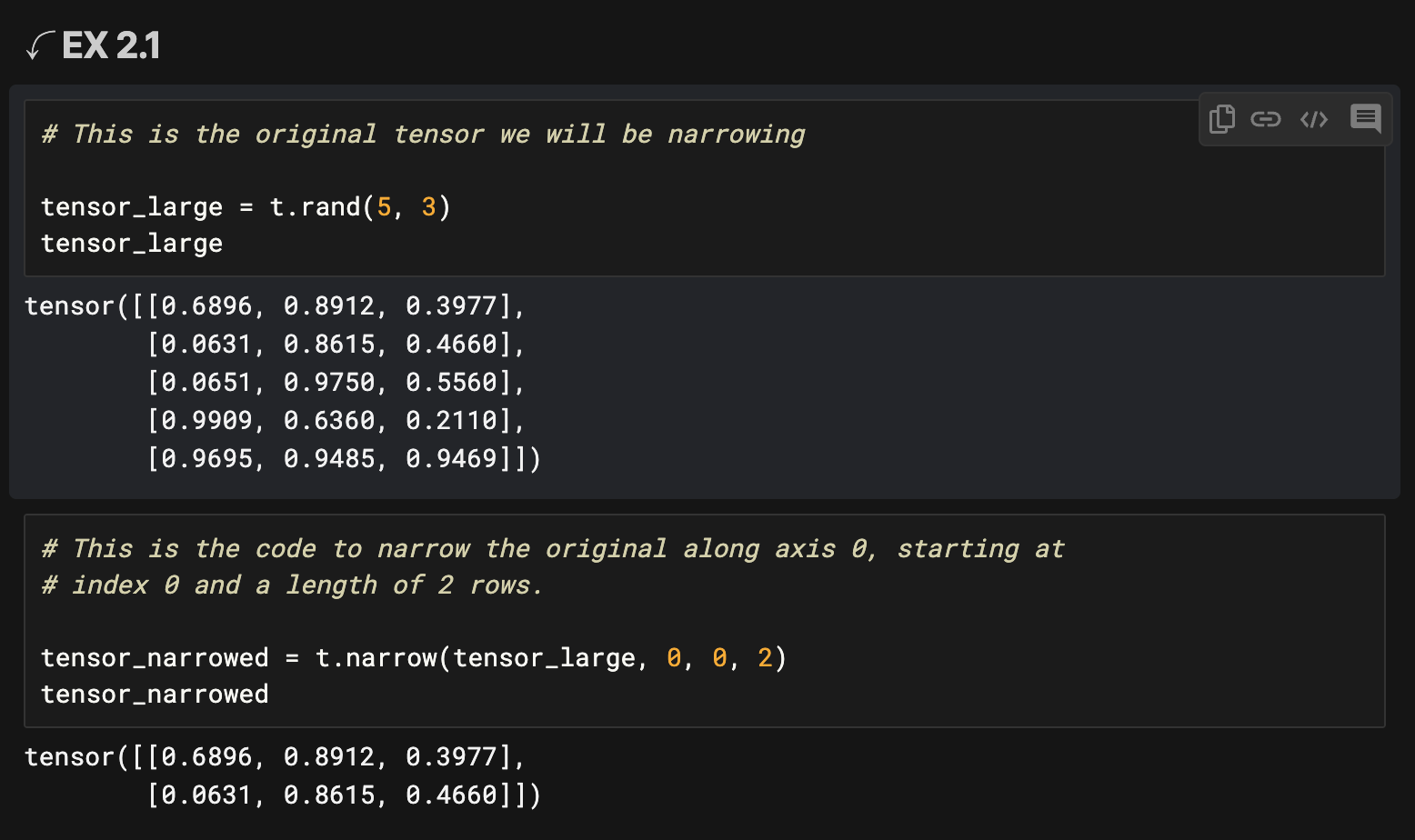

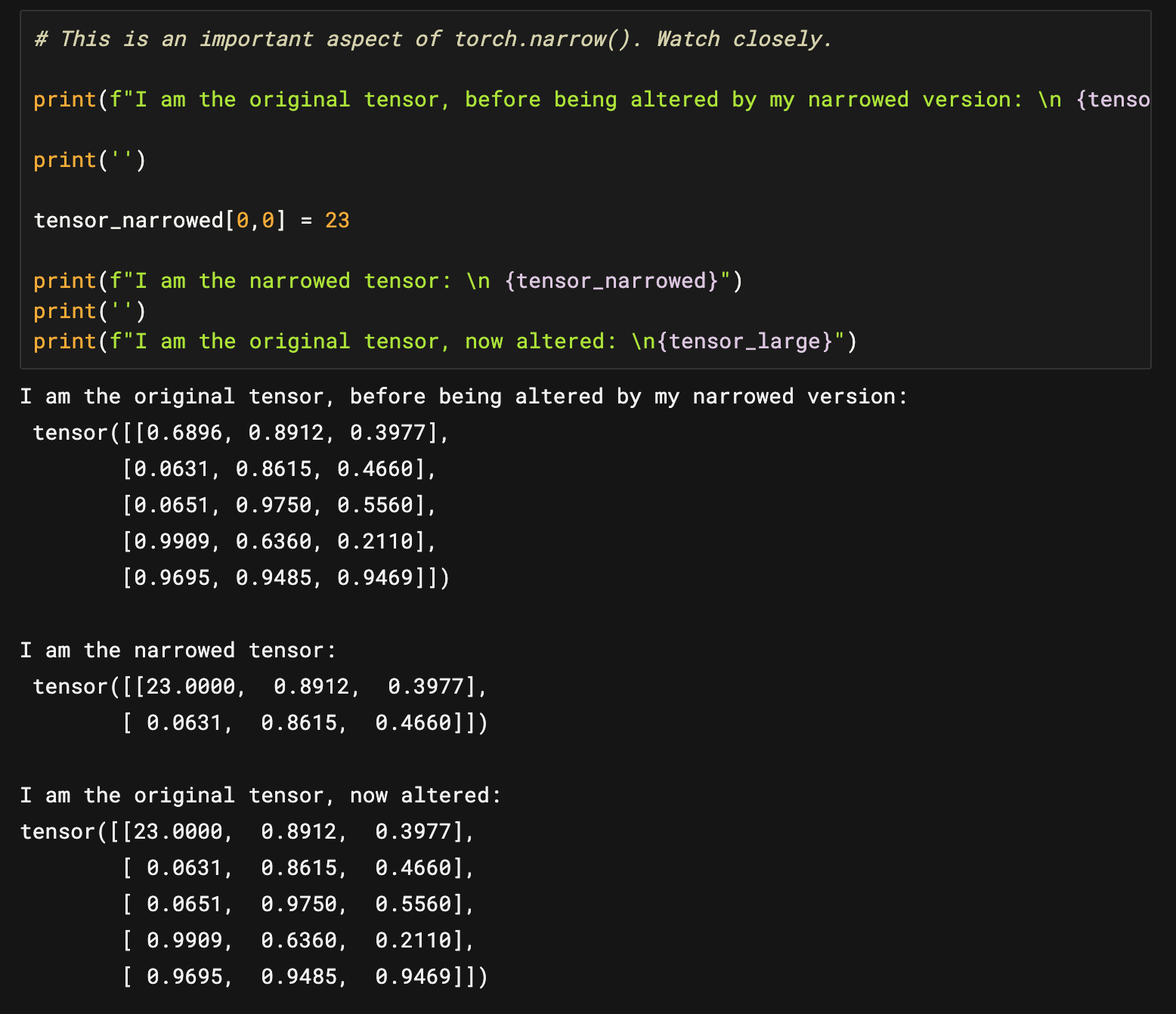

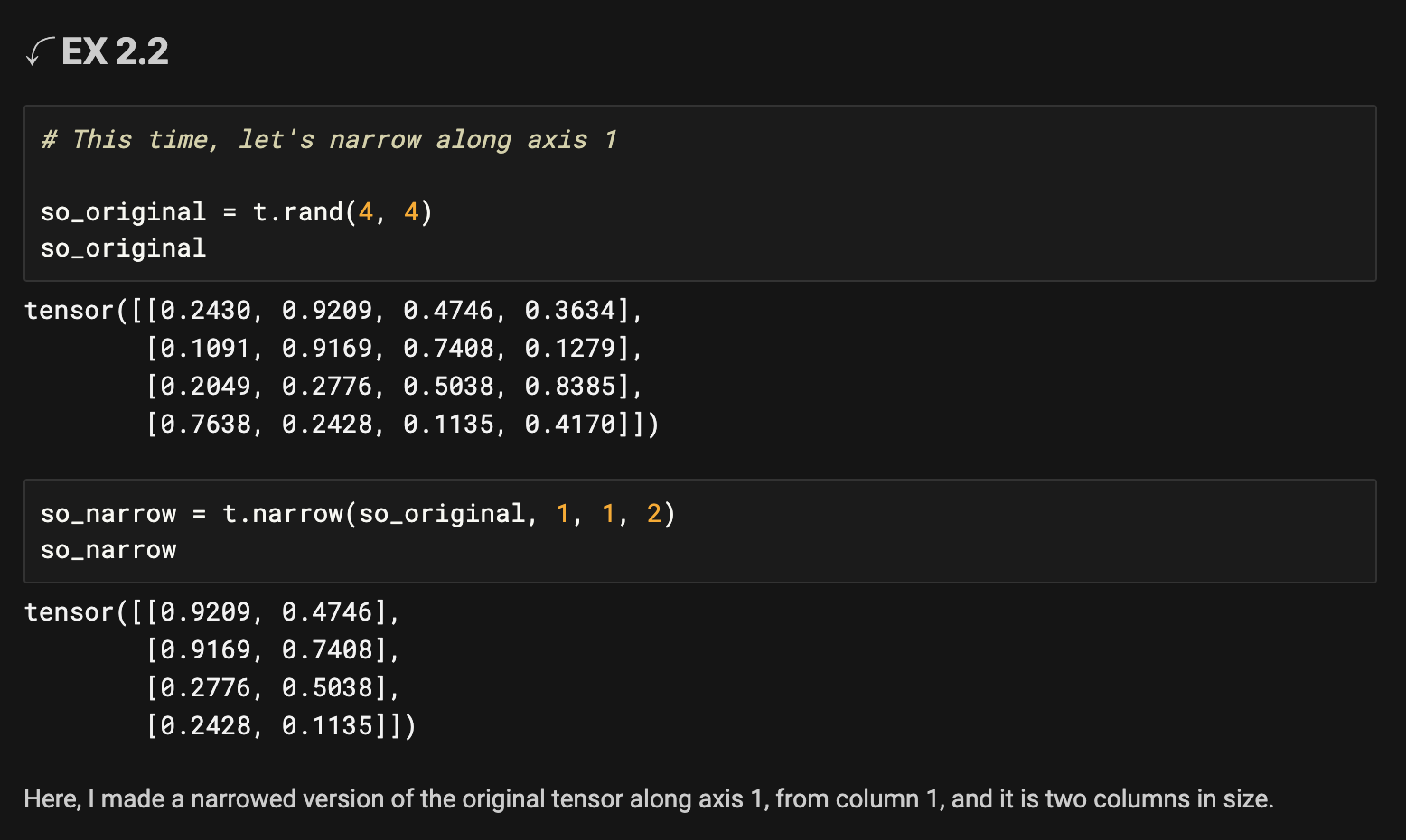

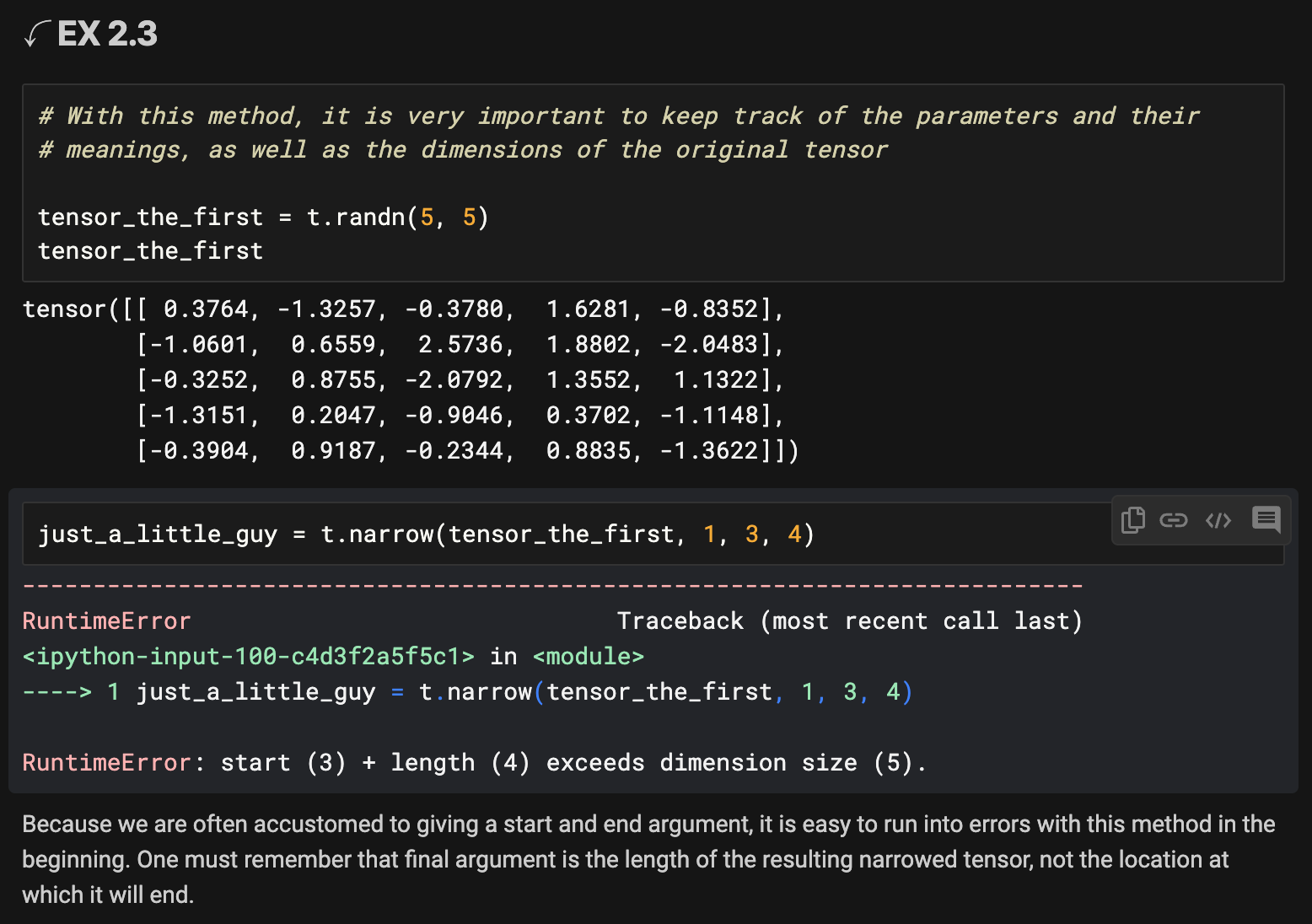

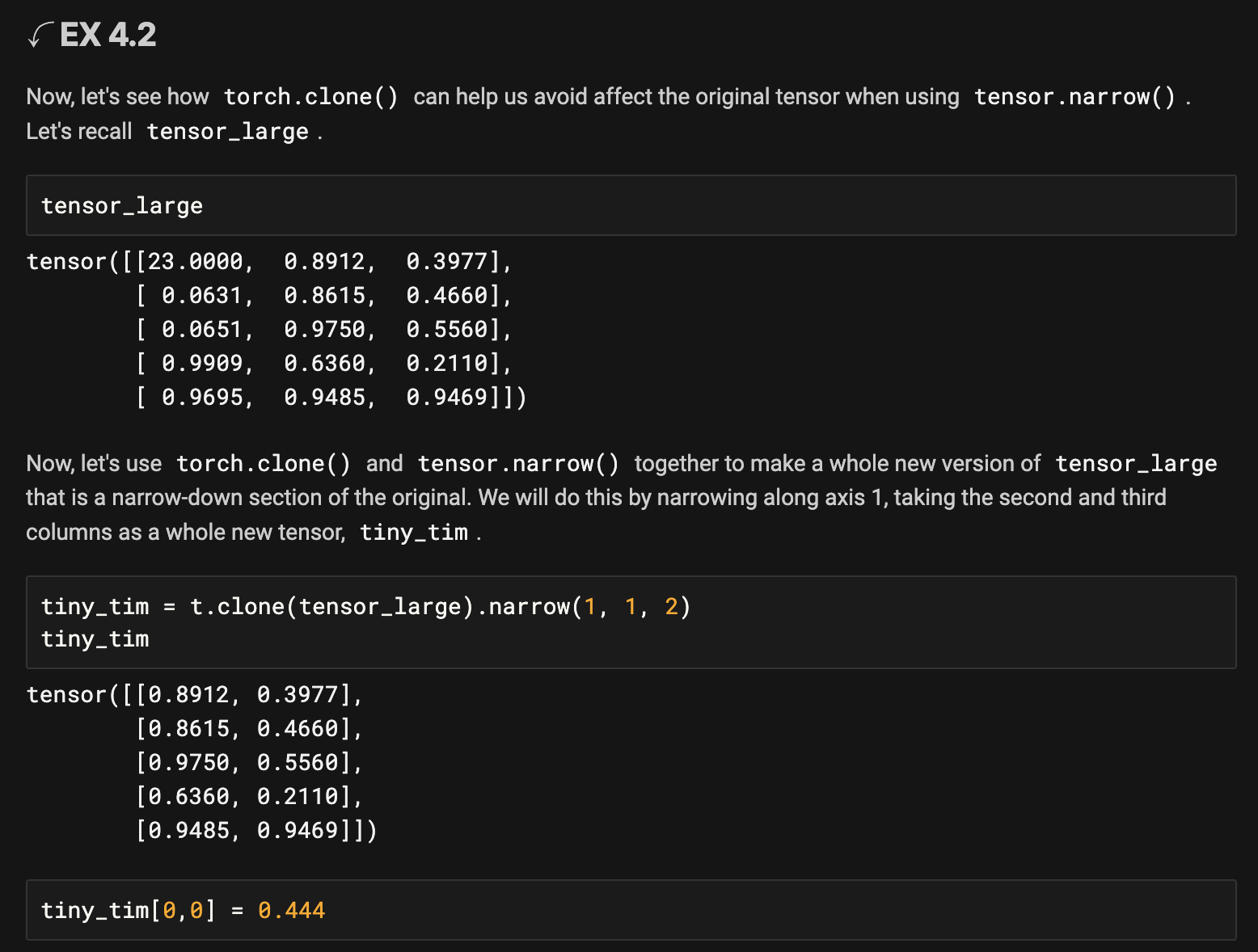

It is important to keep in mind that the narrowed versions of the tensor, when mutated, will pass on that change to the original. The two share the same space in memory and are not unique entities.
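A small sketch of that shared-memory behavior, using a hypothetical grid built with torch.arange() instead of the tensor from the images:

```python
import torch

grid = torch.arange(12).view(3, 4)  # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11]]

# Narrow along dim 1: start at column 1 and keep 2 columns (columns 1 and 2)
middle = torch.narrow(grid, 1, 1, 2)

middle[0, 0] = 100   # write through the narrowed tensor...
print(grid[0, 1])    # ...and the original sees the change: tensor(100)
```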

➤ Function 2 Summary:

torch.narrow() can be very useful for extracting specific sections of a tensor for operations you do not intend to apply to the larger tensor. Just remember that the last argument is the length of the narrowed section, not its end point.
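To make the length-versus-end-point distinction concrete, here is a sketch (hypothetical names) comparing torch.narrow() with the equivalent slice:

```python
import torch

rows = torch.arange(10)

# narrow(input, dim, start, length): the last argument is a LENGTH, not an end index
piece = torch.narrow(rows, 0, 3, 4)   # 4 elements starting at index 3
print(piece)                           # tensor([3, 4, 5, 6])

# The equivalent slice runs from start to start + length
print(torch.equal(piece, rows[3:3 + 4]))  # True
```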

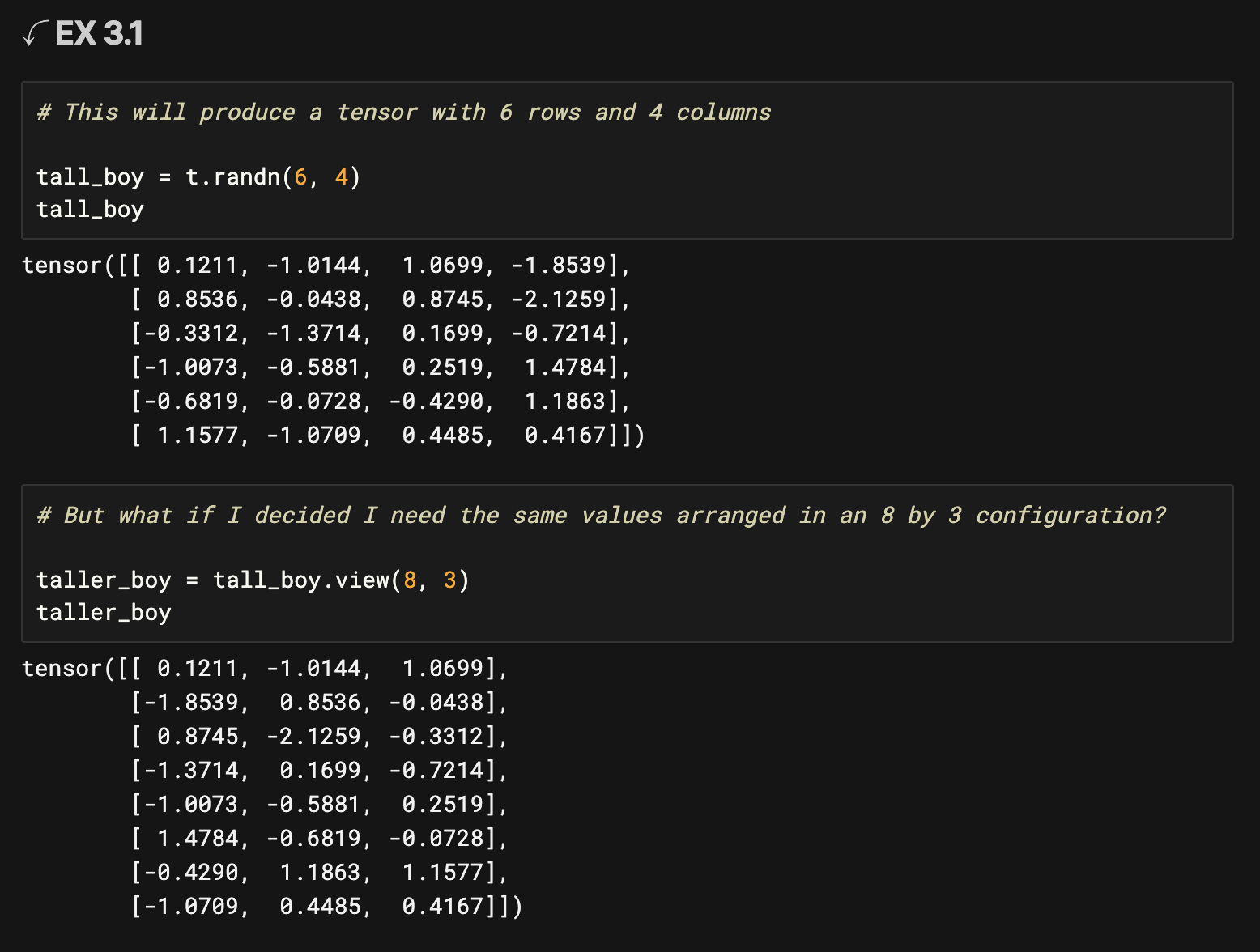

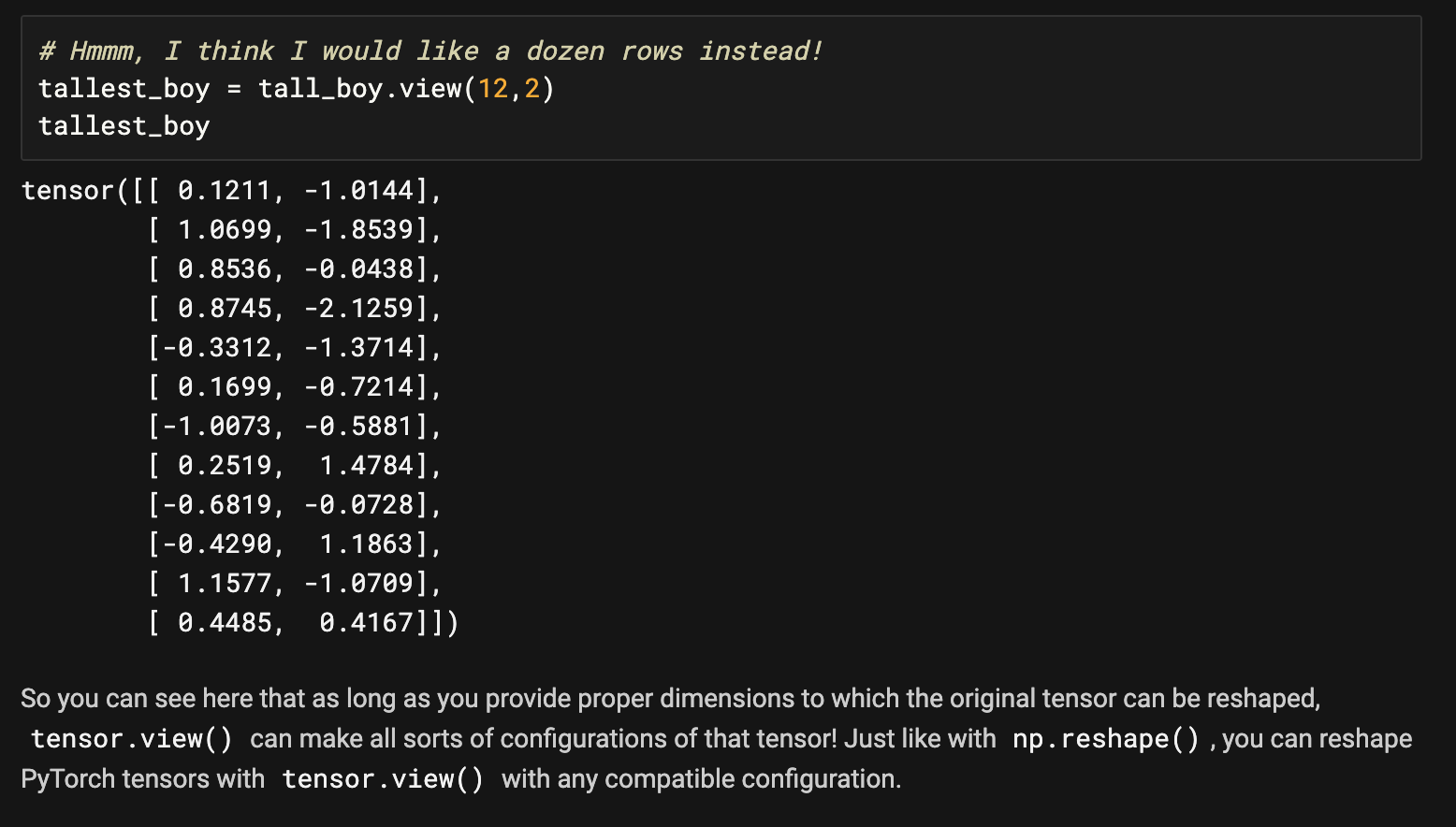

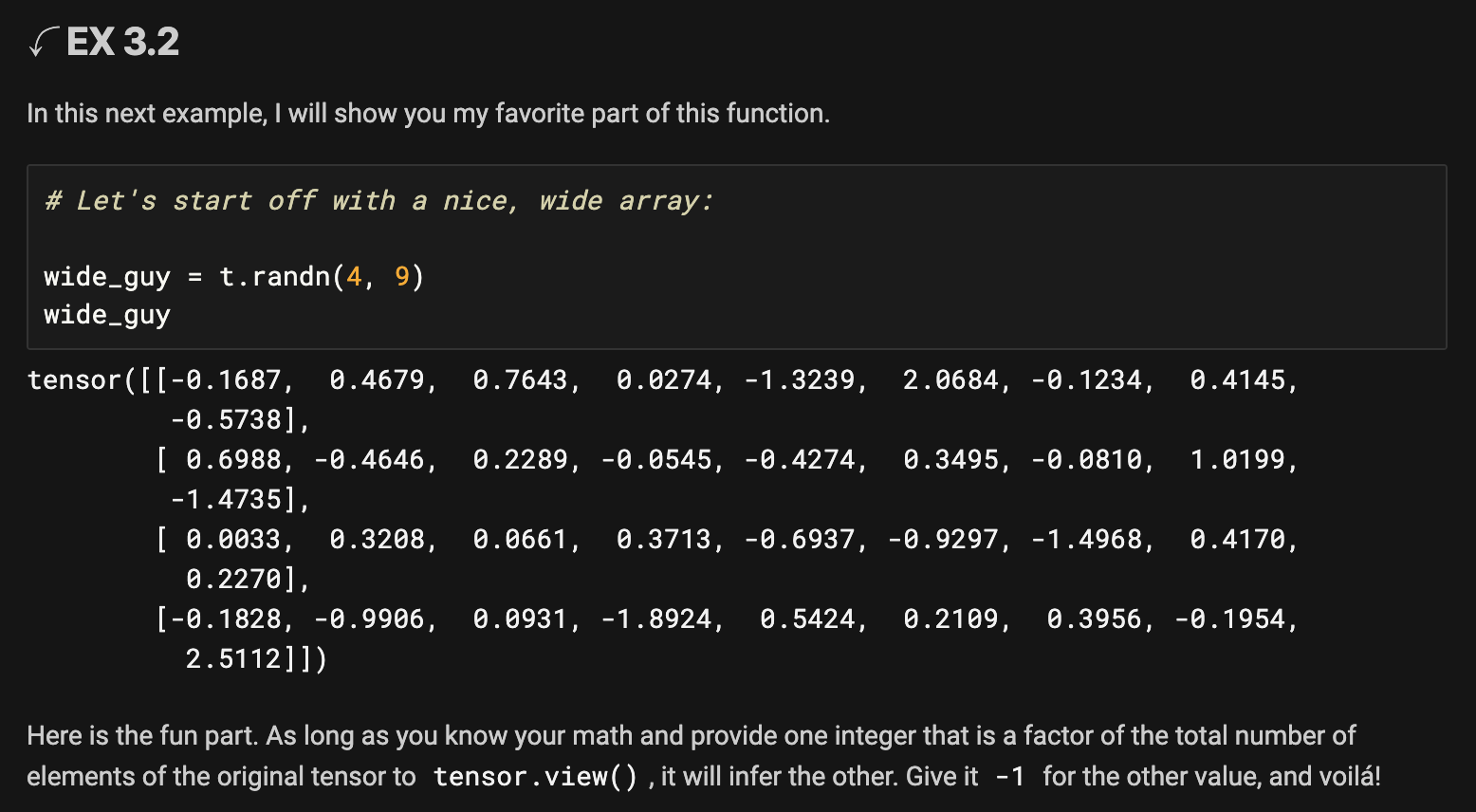

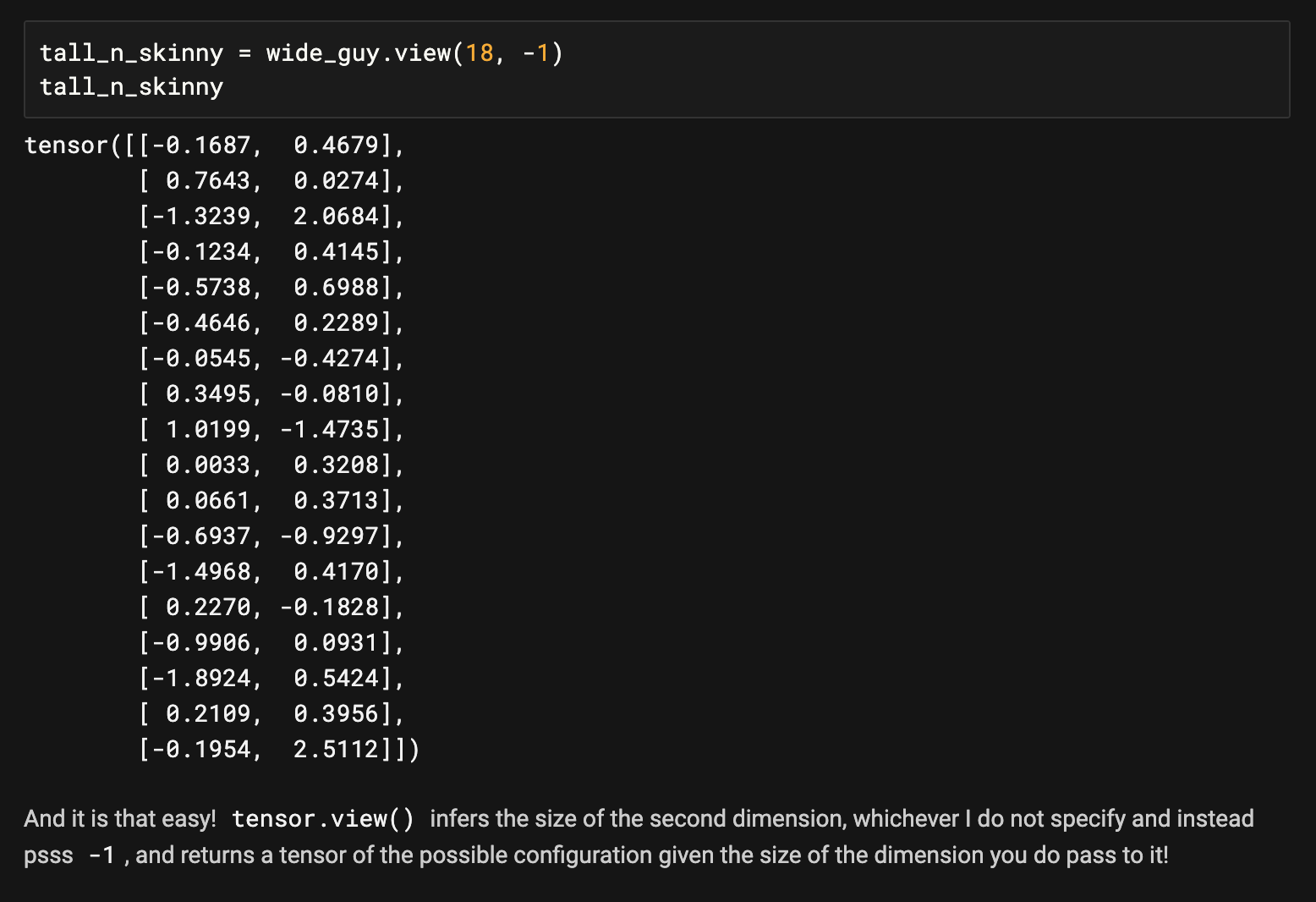

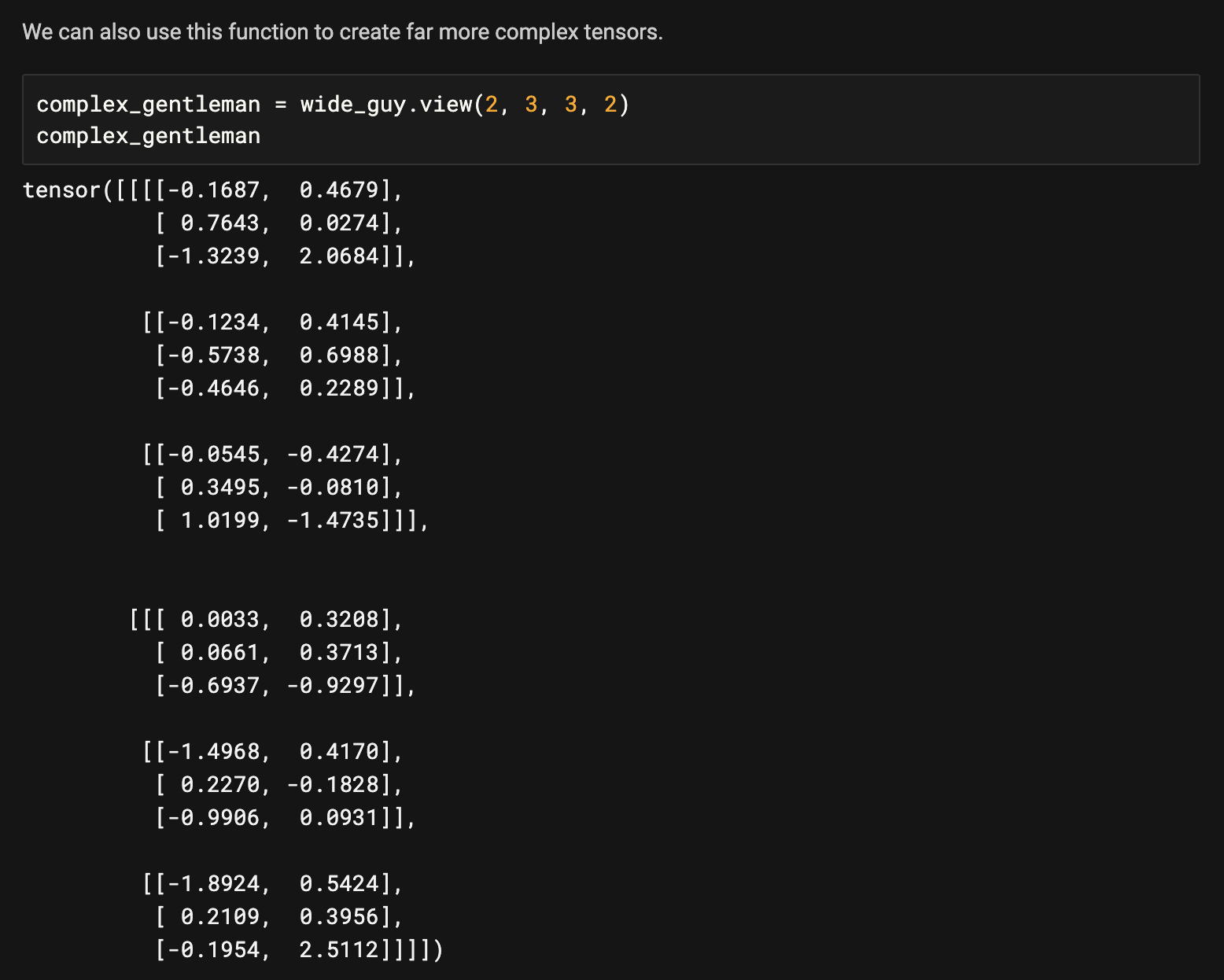

Keep in mind that tensors made from the original with tensor.view() are exactly what the name says: views of the original tensor. So any change to a tensor created with tensor.view() is also a change to the original tensor. See the example below.

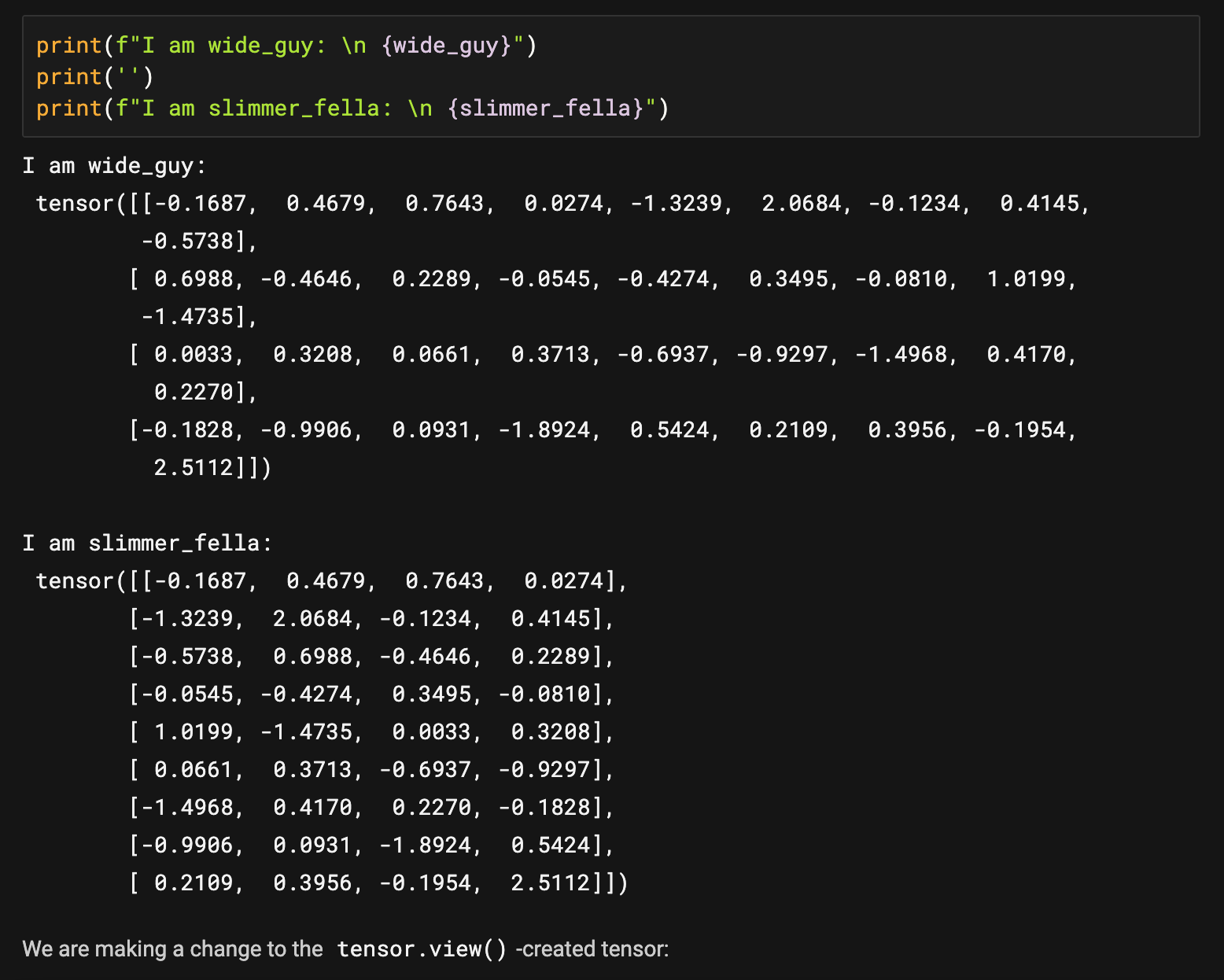

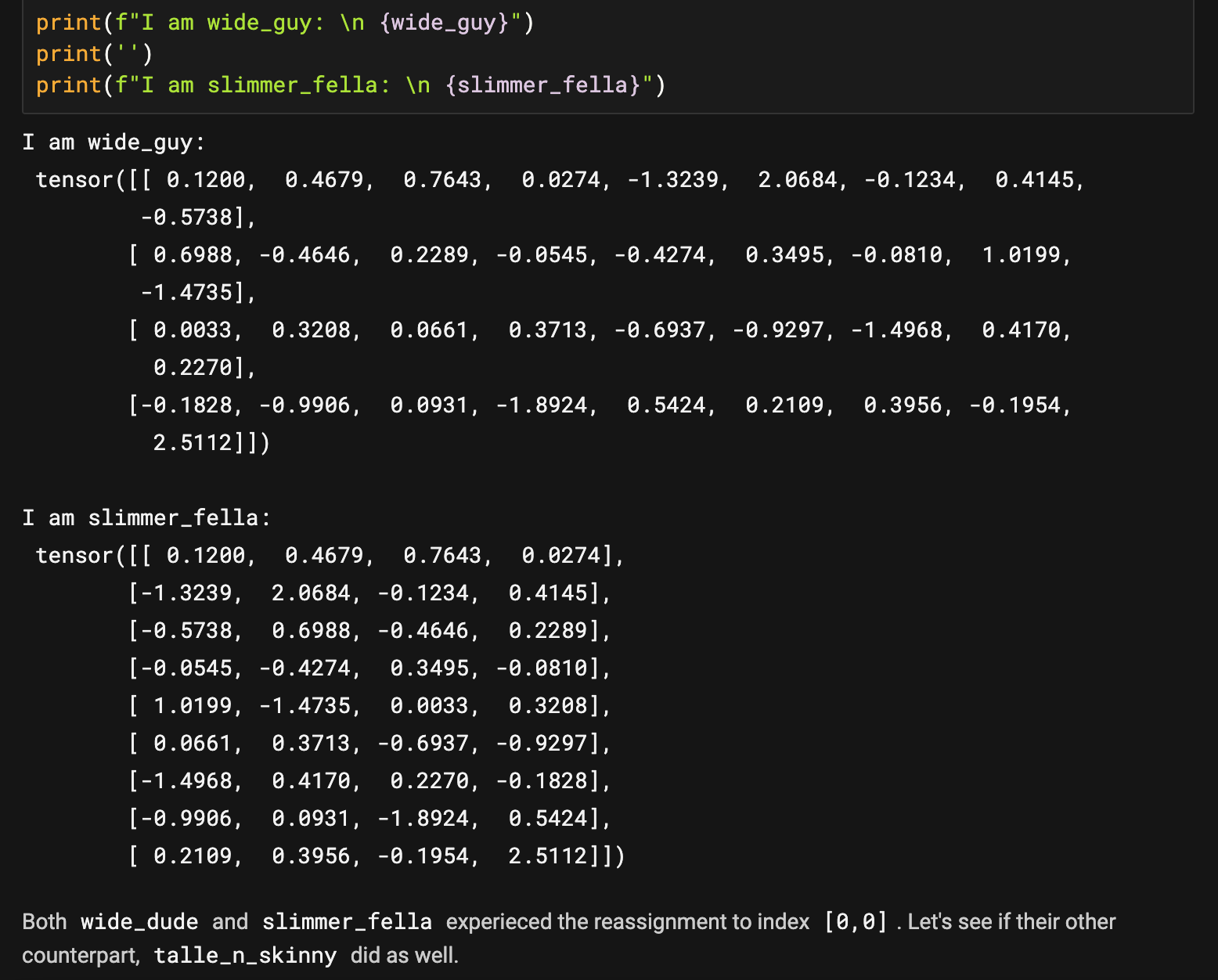

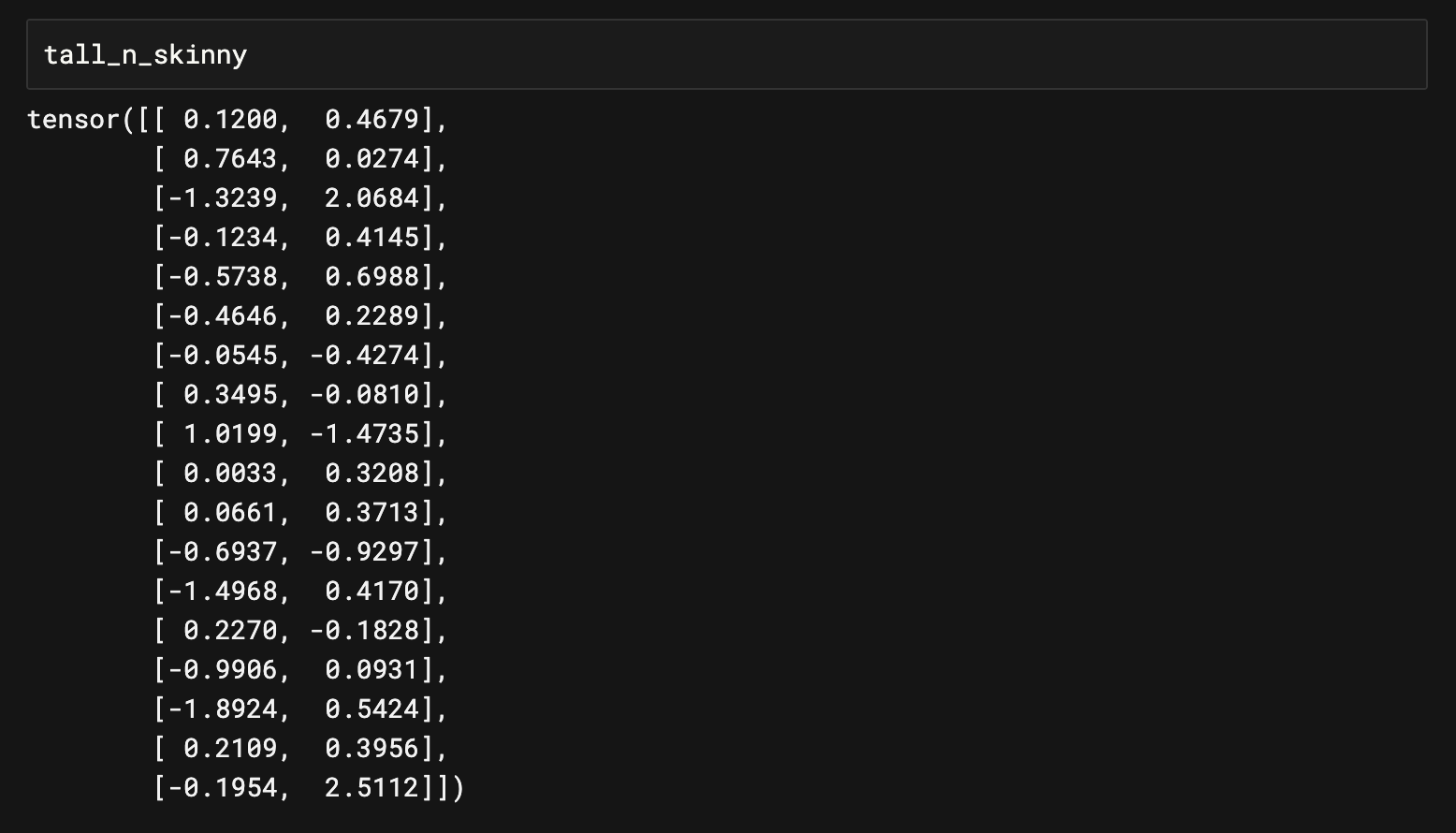
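For readers following along in text, here is a minimal sketch of the same shared-memory behavior with hypothetical tensors (not wide_guy and slimmer_fella). It also shows that view() can infer one dimension when you pass -1:

```python
import torch

flat = torch.arange(6)        # tensor([0, 1, 2, 3, 4, 5])
grid = flat.view(2, 3)        # the same data, viewed as 2 rows x 3 columns
column = flat.view(-1, 1)     # -1 lets PyTorch infer that dimension: shape (6, 1)

grid[1, 2] = -1               # change an element through the view
print(flat[5])                # tensor(-1): the original changed too

# Both tensors point at the same underlying memory
print(grid.data_ptr() == flat.data_ptr())  # True
```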

Let's take wide_guy and slimmer_fella from above, the first two tensors in this section.

So, as you see, every tensor "made" with tensor.view() is just a view thereof. If we want a true copy, then we will need to use the function presented as function 4 below, torch.clone(). But first, let's see how easy it is to go wrong with tensor.view().

➤ Function 3 Summary:

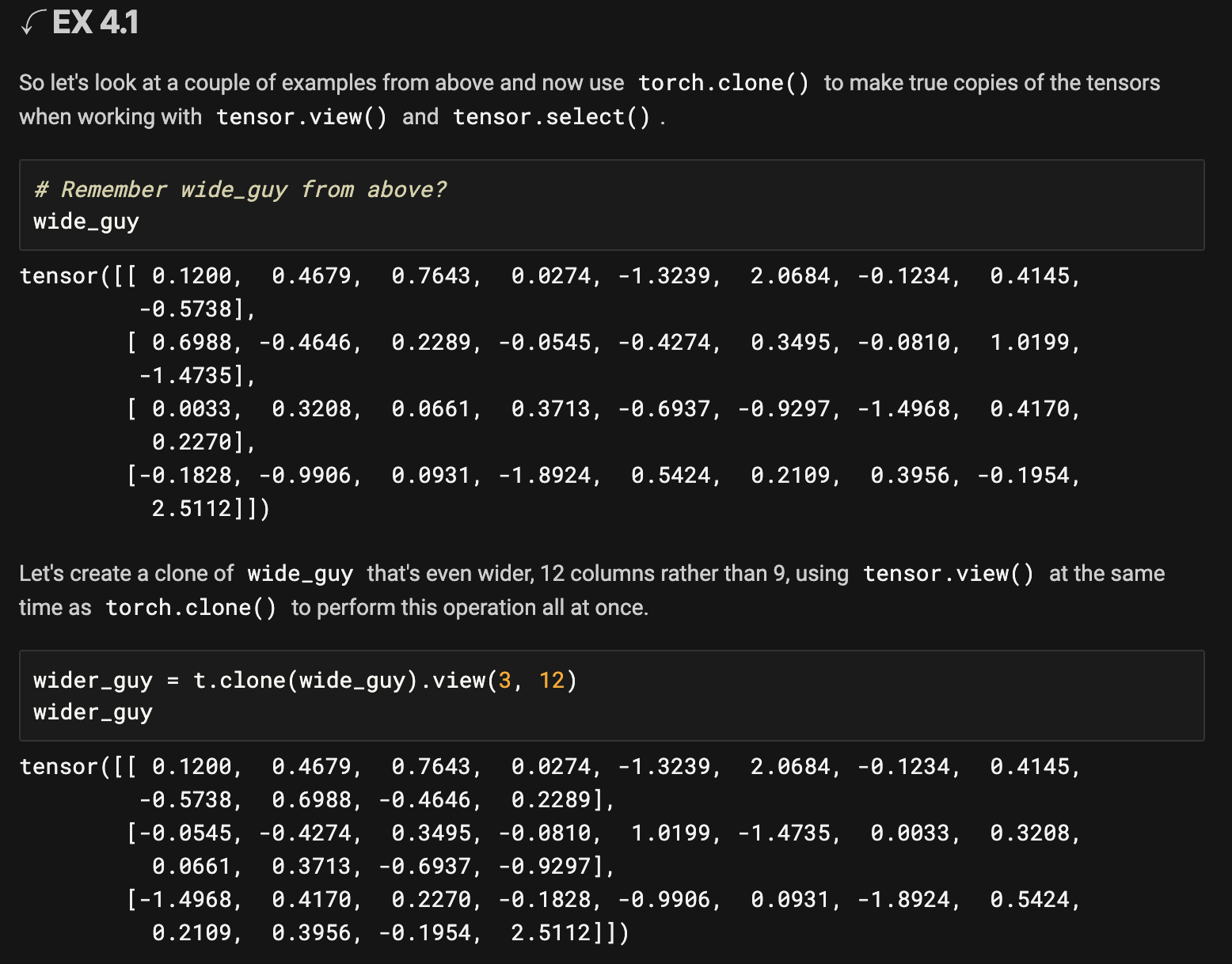

tensor.view() can be used in various ways to reshape and reinterpret tensors. It is easy to see how useful this method truly is, thanks to its versatility! Now let's look at how to combine tensor.view() with another great method that creates tensors independent of the original.

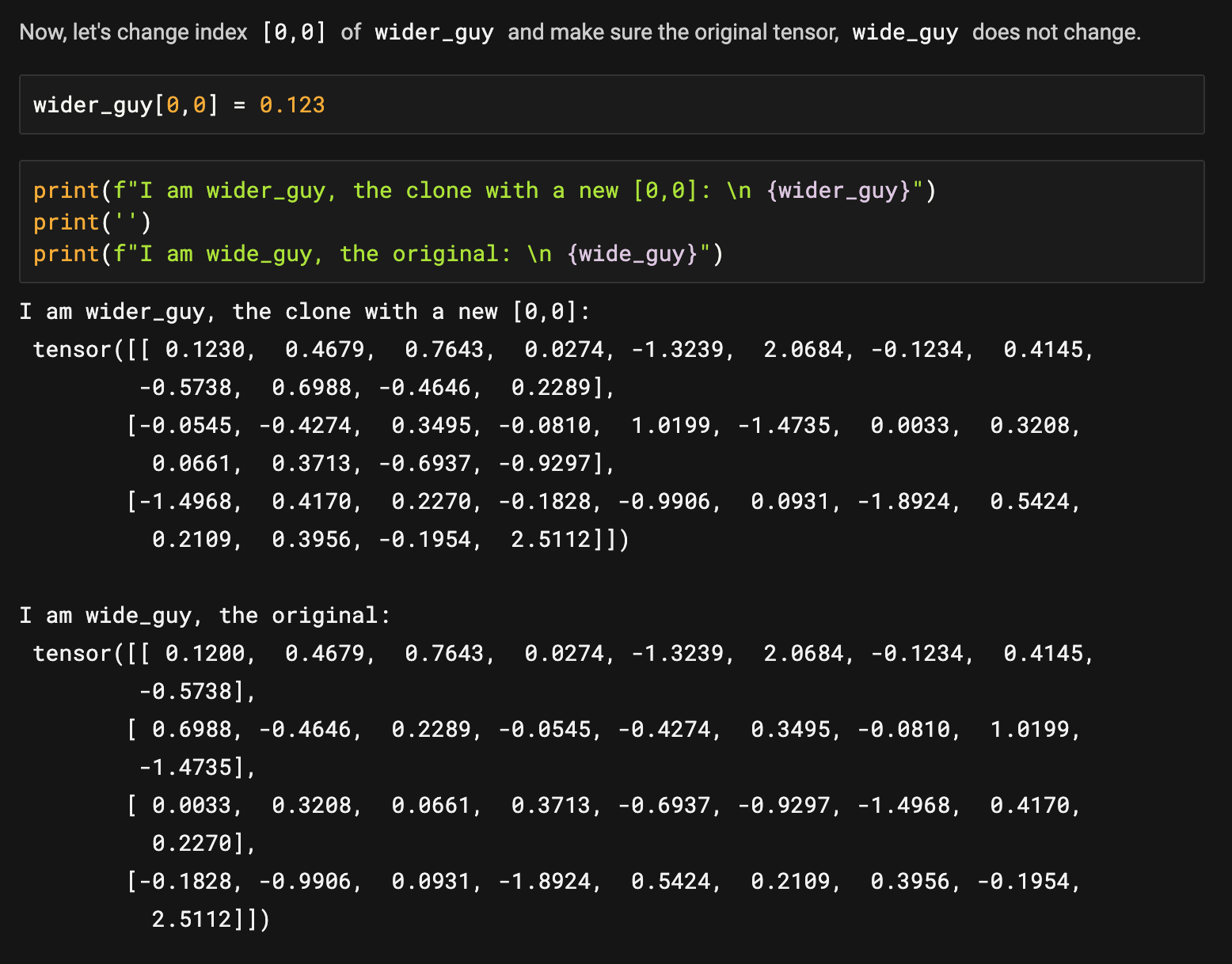

How lovely! torch.clone() did its job beautifully and worked in the same line of code as tensor.view()! So we have the original tensor wide_guy, a (4, 9) tensor, and wider_guy, a (3, 12) tensor. wider_guy is an entirely new tensor, so any changes to it will not affect the original.
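A deterministic sketch of the clone-then-view pattern, using torch.arange() values and the hypothetical names wide and wider in place of the random tensors from the images:

```python
import torch

# Hypothetical (4, 9) tensor with predictable values 0..35
wide = torch.arange(36, dtype=torch.float32).view(4, 9)

# clone() copies the data first; view() then reshapes the copy, not the original
wider = wide.clone().view(3, 12)

wider[0, 0] = -1.0                          # change the copy
print(wider.shape)                          # torch.Size([3, 12])
print(wide[0, 0])                           # tensor(0.): the original is untouched
print(wider.data_ptr() == wide.data_ptr())  # False: separate memory
```

The order matters here: wide.view(3, 12).clone() would also give an independent copy, but wide.view(3, 12) on its own would not.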

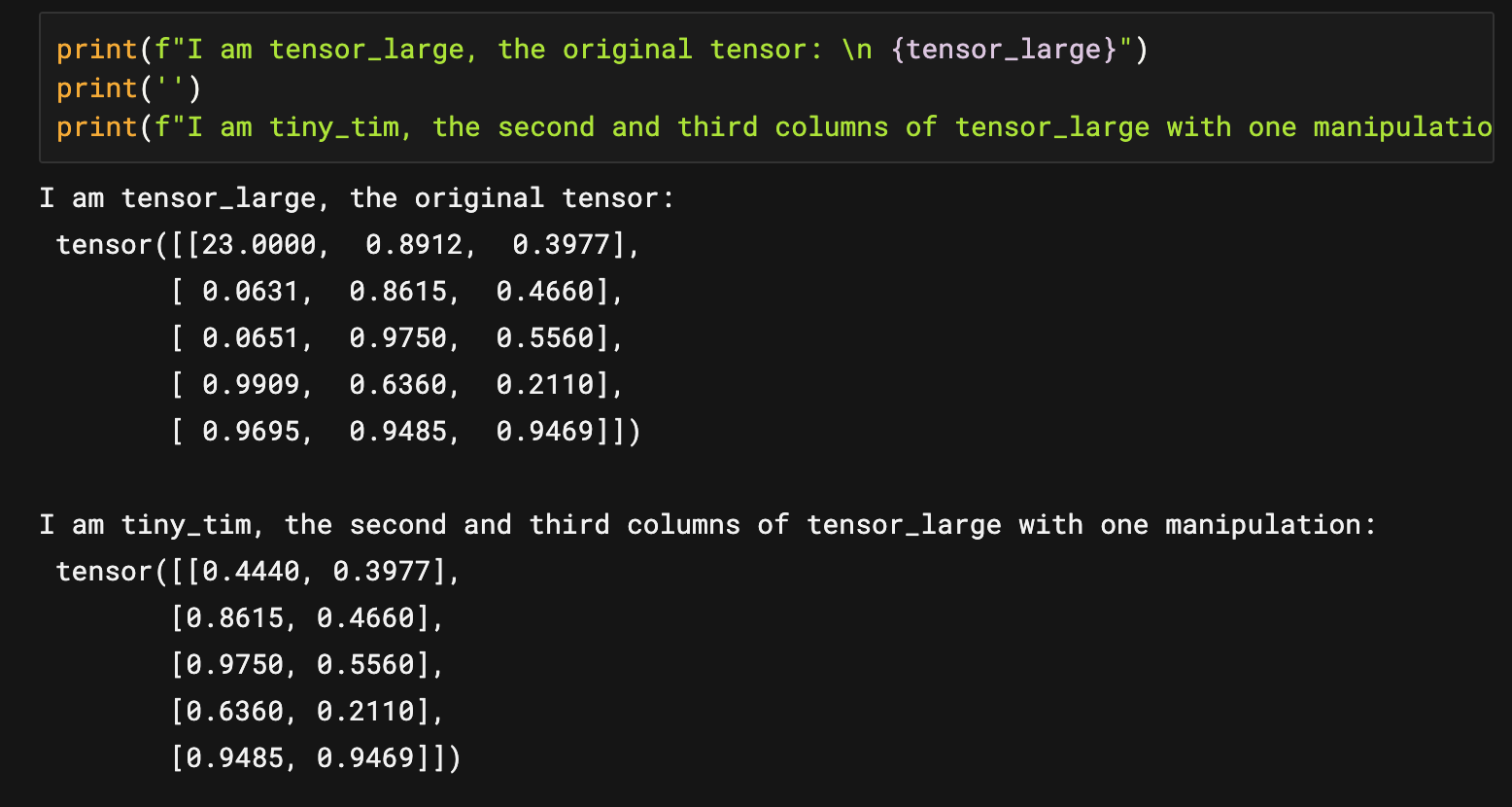

And as you can see, tiny_tim index [0,0] is now 0.4440, and tensor_original[0,1], which is the corresponding element to [0,0] in tiny_tim, has not changed along with tiny_tim index [0,0]. GO CLONE!

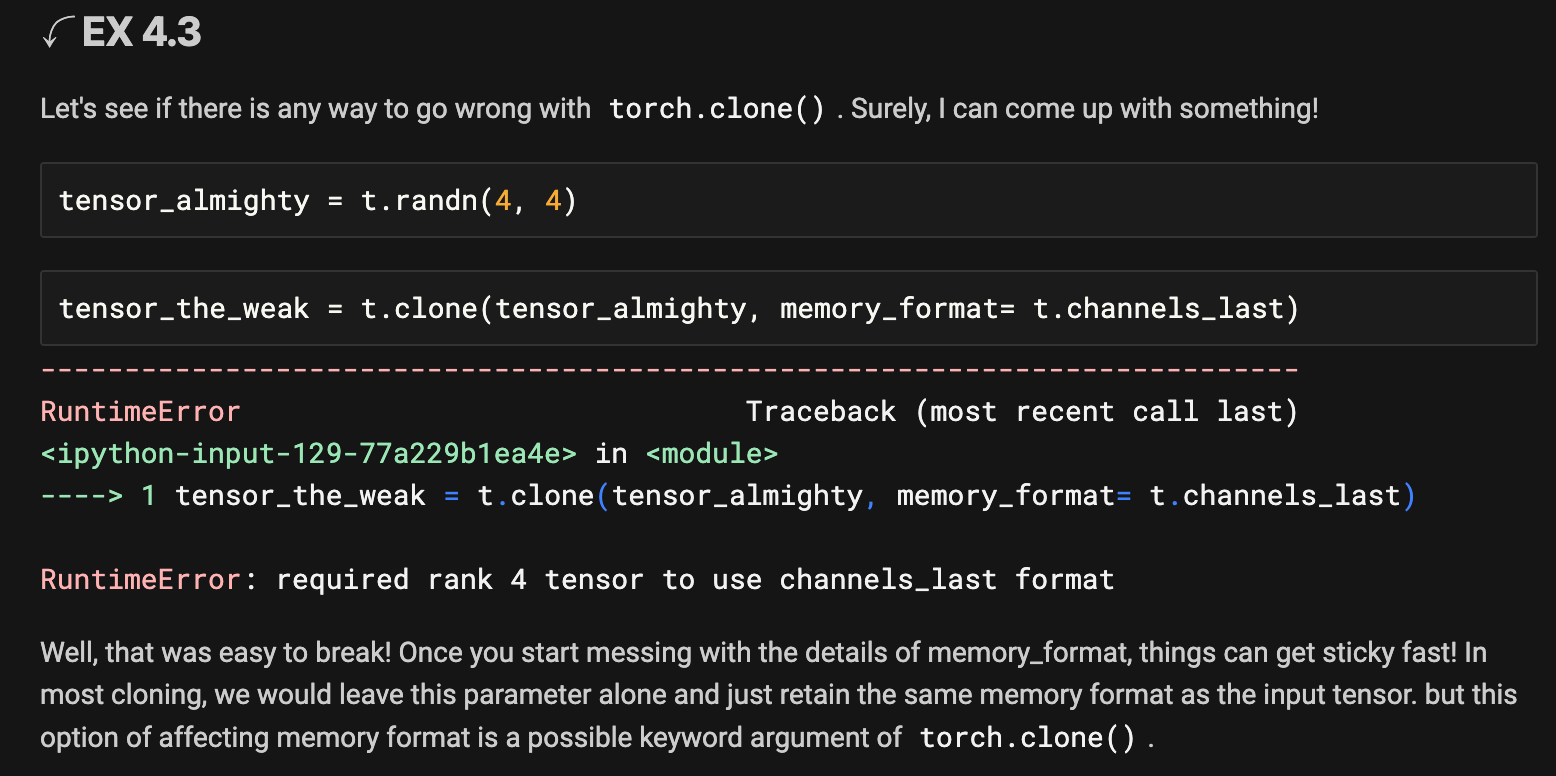

➤ Function 4 Summary:

torch.clone() is an invaluable part of the PyTorch library, just as integral to it as .copy() and copy.deepcopy() are in Python. It lets us make truly independent copies of tensors so that we can manipulate those copies without affecting the original. It is a little gem in the PyTorch library!
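One detail worth knowing: clone() is itself recorded by Autograd, so a clone of a tensor that requires gradients stays connected to the original's computation graph. When you want a copy with no gradient history, the common idiom is detach().clone(). A short sketch with a hypothetical tensor w:

```python
import torch

w = torch.ones(3, requires_grad=True)

# clone() is tracked by Autograd: the copy stays connected to w's graph
tracked = w.clone()
print(tracked.requires_grad)   # True

# detach().clone(): an independent copy with no gradient history
snapshot = w.detach().clone()
print(snapshot.requires_grad)  # False

# Gradients flow back through clone() to the original tensor
(tracked * 2).sum().backward()
print(w.grad)                  # tensor([2., 2., 2.])
```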

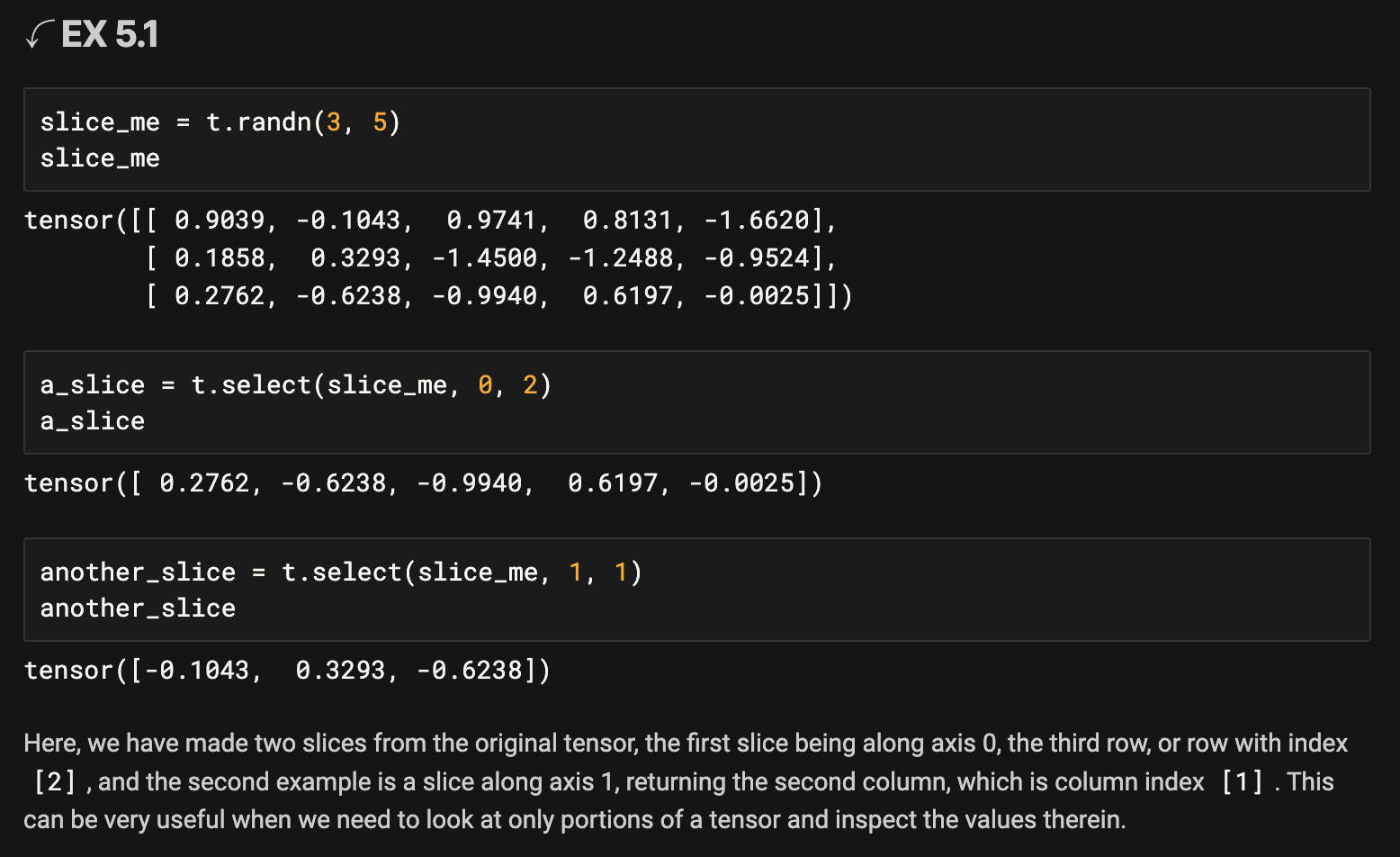

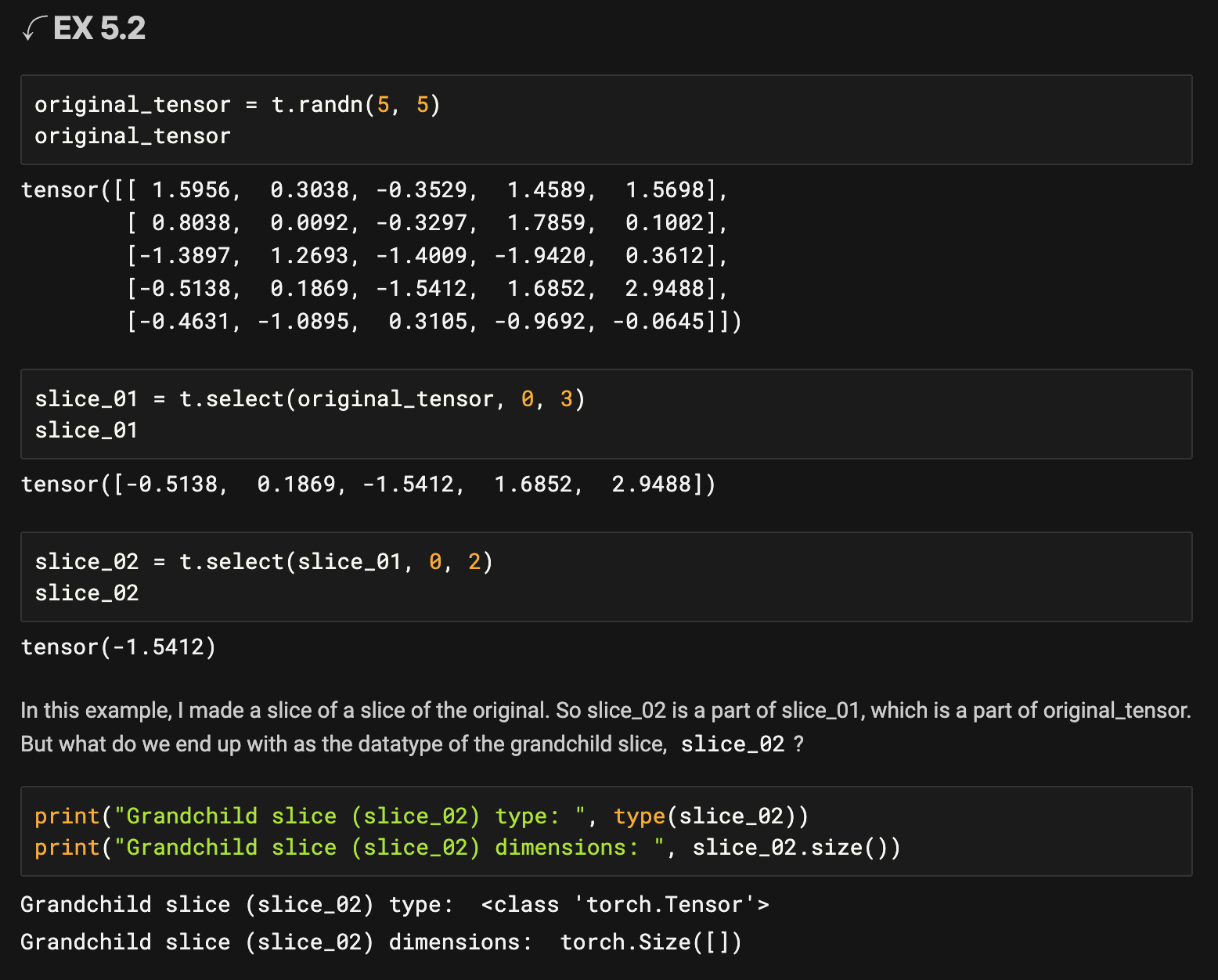

So once we take a slice of a slice in this way, we end up with just a zero-dimensional tensor.
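A minimal sketch of the slice-of-a-slice idea with a small hypothetical matrix. torch.select(input, dim, index) removes the selected dimension each time, so two selections on a 2-D tensor leave a 0-dimensional tensor:

```python
import torch

matrix = torch.tensor([[1, 2, 3],
                       [4, 5, 6]])

row = torch.select(matrix, 0, 1)   # second row: tensor([4, 5, 6]), shape (3,)
value = torch.select(row, 0, 2)    # third element of that row: tensor(6)

print(row.shape, value.shape)      # torch.Size([3]) torch.Size([])
print(value.dim())                 # 0
```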

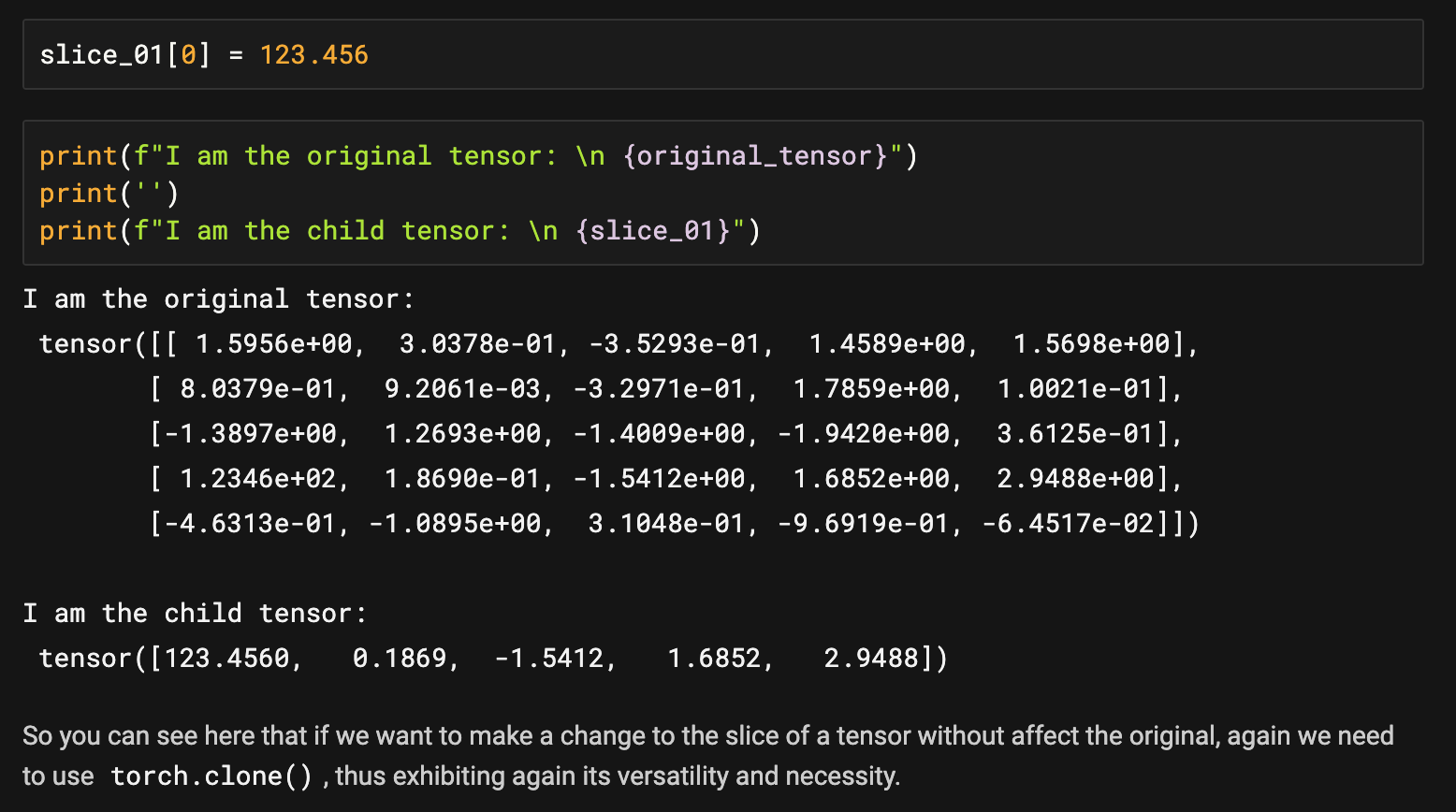

Let's take a brief look at how changing a slice affects the original tensor. Because the values returned by torch.select() are views of the original, changes to the slice will affect the original. Let's see this in action. We will change a value in slice_01 and see how it affects its parent.

➤ Function 5 Summary:

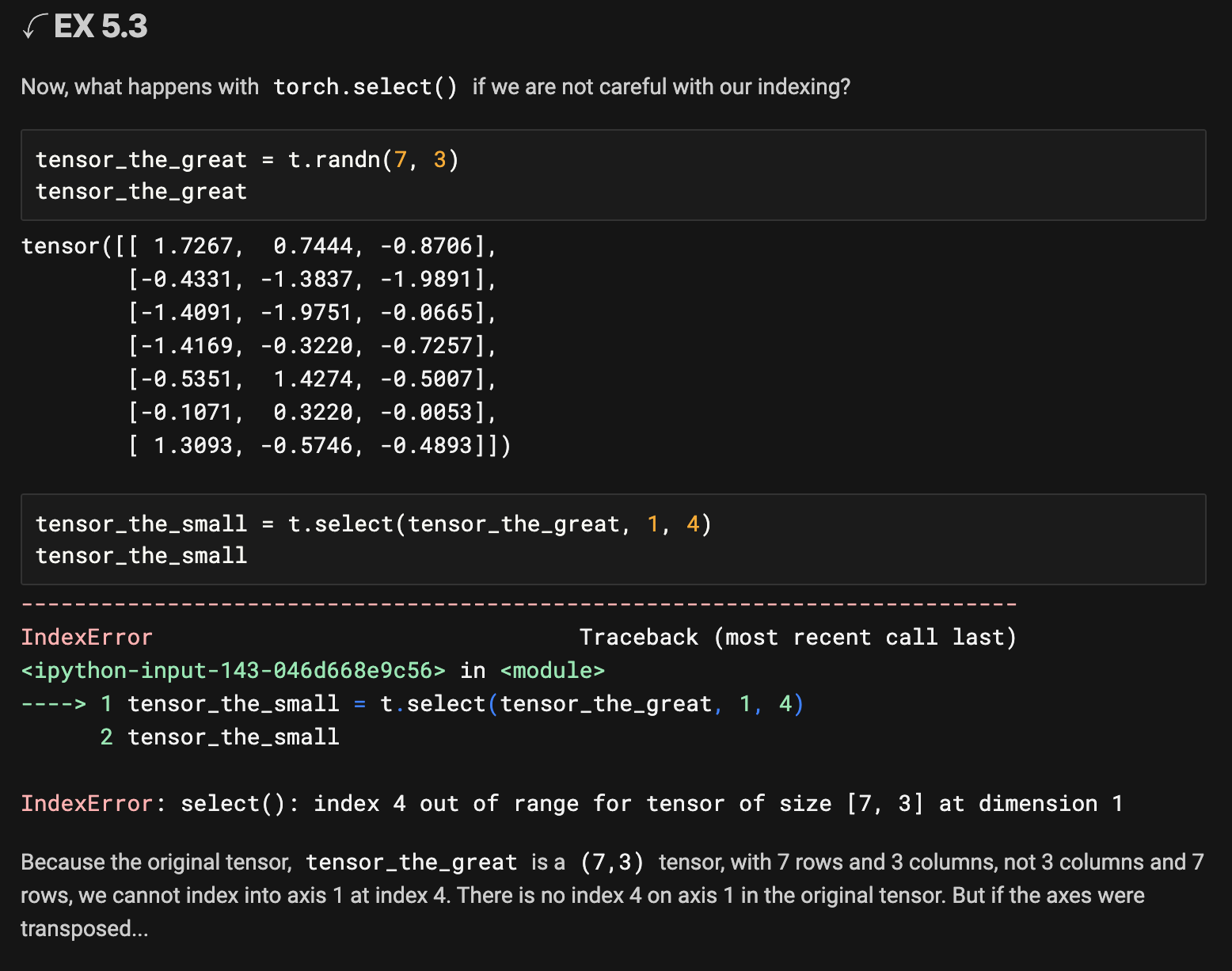

When working with tensors of extremely large proportions, it is important to have functions that can easily return to you the specific sections that you need to view or work with. And while torch.select() is basically the equivalent of indexing into and slicing tensors, I find it to be helpful visually and conceptually when reading code. Seeing the word "select" and the parameters by which values were selected can sometimes be quicker for the brain to process when running through many lines of code and trying to comprehend the inner workings or debug the code.
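A quick check of that equivalence, plus the view behavior described earlier, on a hypothetical tensor t:

```python
import torch

t = torch.arange(12).view(3, 4)

# torch.select(t, dim, index) matches plain indexing along that dimension
print(torch.equal(torch.select(t, 0, 2), t[2]))     # True (a row)
print(torch.equal(torch.select(t, 1, 1), t[:, 1]))  # True (a column)

# Like indexing, the result is a view: writes go back to t
col = torch.select(t, 1, 1)
col[0] = 99
print(t[0, 1])   # tensor(99)
```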

➢ Conclusion

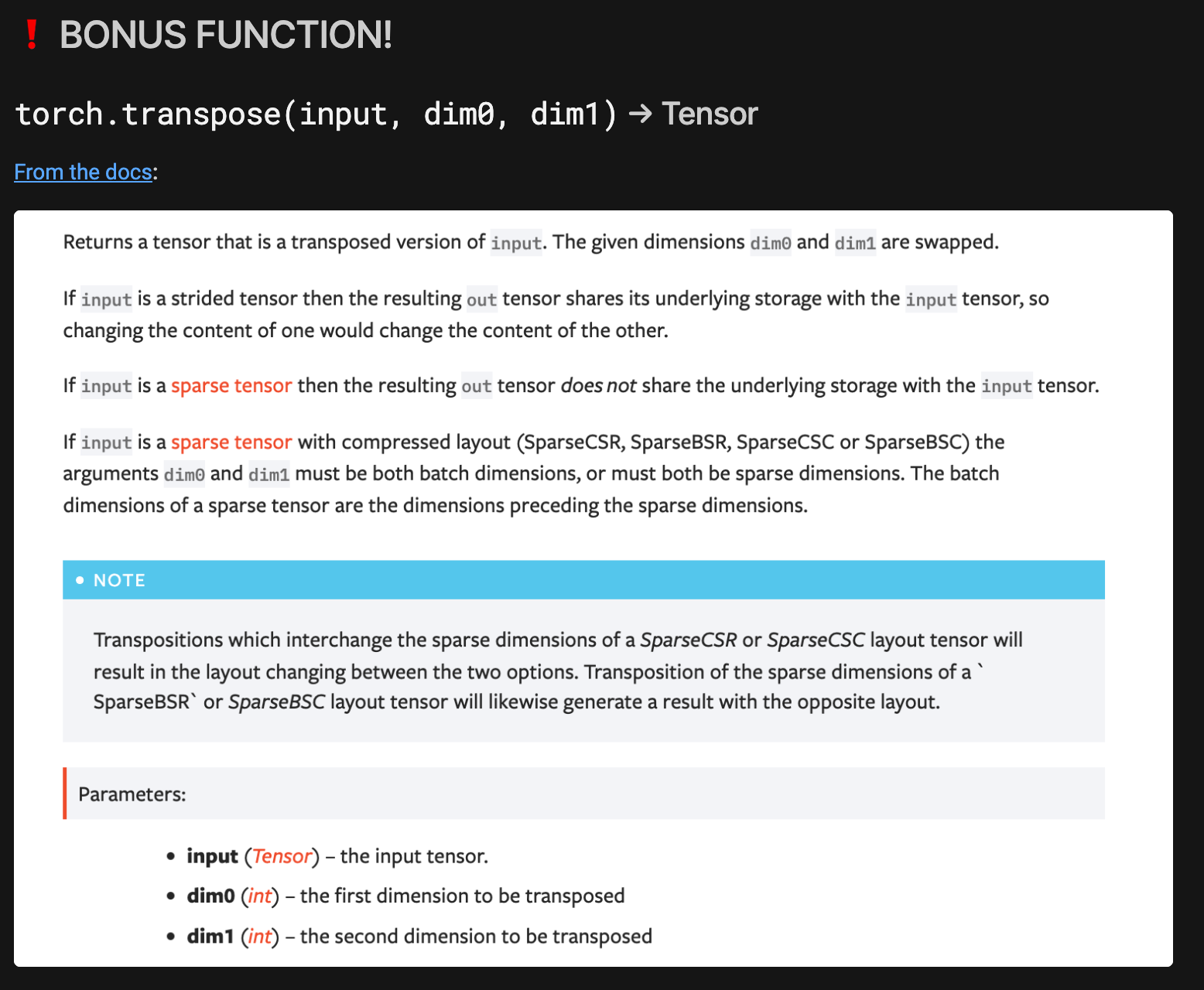

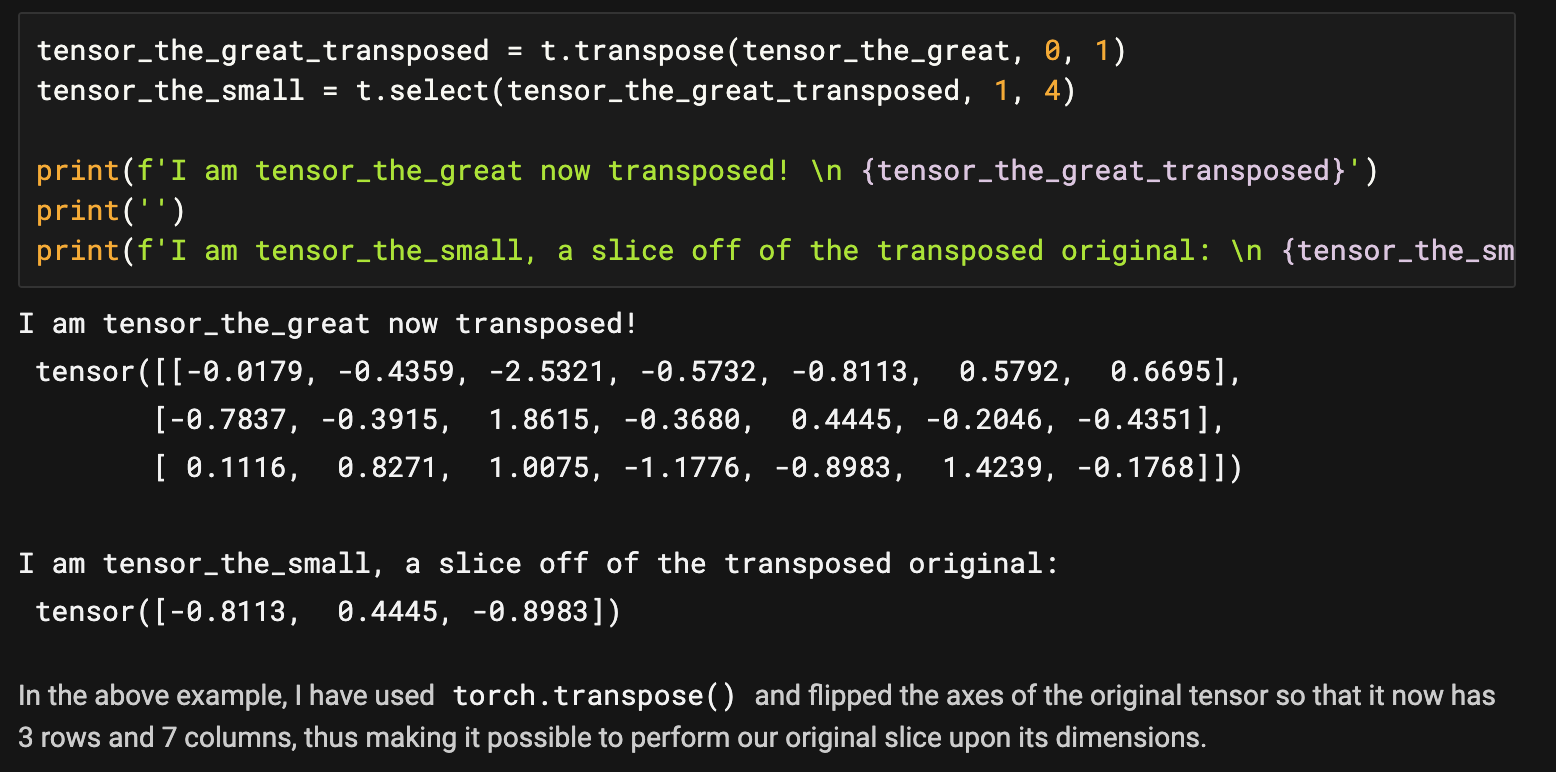

PyTorch is an integral part of deep learning in Python. This library is packed with features and options that make so many necessary aspects of training and deploying neural network models run more smoothly, more quickly, more effectively, and more elegantly. It is so full of utility that it was hard to choose just 5 functions to present here, hence the 6th bonus function. I just could not leave out torch.transpose(). It is too important!
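Since the transpose examples live in the images, here is a short text sketch with a hypothetical 2 x 3 tensor. torch.transpose(input, dim0, dim1) swaps two dimensions and returns a view; because that view is not contiguous in memory, calling .view() on it fails, and .contiguous() (or .reshape()) is the usual workaround:

```python
import torch

m = torch.arange(6).view(2, 3)      # shape (2, 3): [[0, 1, 2], [3, 4, 5]]

# Swap dimensions 0 and 1
mt = torch.transpose(m, 0, 1)       # shape (3, 2): [[0, 3], [1, 4], [2, 5]]

print(mt.data_ptr() == m.data_ptr())  # True: mt is a view of m
print(mt.is_contiguous())             # False: its elements are not laid out row by row

try:
    mt.view(-1)                       # view() needs compatible strides, so this fails
except RuntimeError as err:
    print("view() failed:", err)

flat = mt.contiguous().view(-1)       # copy into contiguous memory first: [0, 3, 1, 4, 2, 5]
```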

Some other functions from PyTorch that I find to be incredibly useful are:

- torch.unique() - returns only the unique values within a given tensor
- torch.index_select() - returns a new tensor containing the entries of the given tensor along a chosen dimension, at the index positions you pass in
- torch.where() - takes a condition and two tensors and returns a tensor whose elements come from the first tensor where the condition is true and from the second where it is false
- torch.take() - returns a tensor of the values from the given tensor at the given indices, treating the tensor as if it were flattened to 1-D
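A compact sketch of those four functions on one hypothetical tensor:

```python
import torch

t = torch.tensor([[4, 1, 4],
                  [2, 1, 9]])

# torch.unique: the distinct values (sorted by default)
uniq = torch.unique(t)                                  # values 1, 2, 4, 9

# torch.index_select(input, dim, index): whole slices along dim at the given positions
cols = torch.index_select(t, 1, torch.tensor([0, 2]))   # columns 0 and 2: [[4, 4], [2, 9]]

# torch.where(condition, x, y): take from x where condition is True, else from y
big = torch.where(t > 3, t, torch.zeros_like(t))        # [[4, 0, 4], [0, 0, 9]]

# torch.take(input, index): index into input as if it were flattened row by row
picked = torch.take(t, torch.tensor([0, 5]))            # values 4 and 9

print(uniq, cols, big, picked, sep="\n")
```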

There are so many more functions I would love to share! I look forward to creating more projects and presentations with PyTorch. Stay tuned for more exciting projects from my explorations into deep learning!

➢ Reference Links:

In addition to the links and sources cited throughout the project, these are some sources that I found very helpful:

- Official documentation for tensor operations: https://pytorch.org/docs/stable/torch.html

- PyTorch Essential Training: Deep Learning

- My Notebook